Jake Brawer, Ph.D.

Computer science Ph.D. from Yale.

Postdoc at CU Boulder.

Social Roboticist.

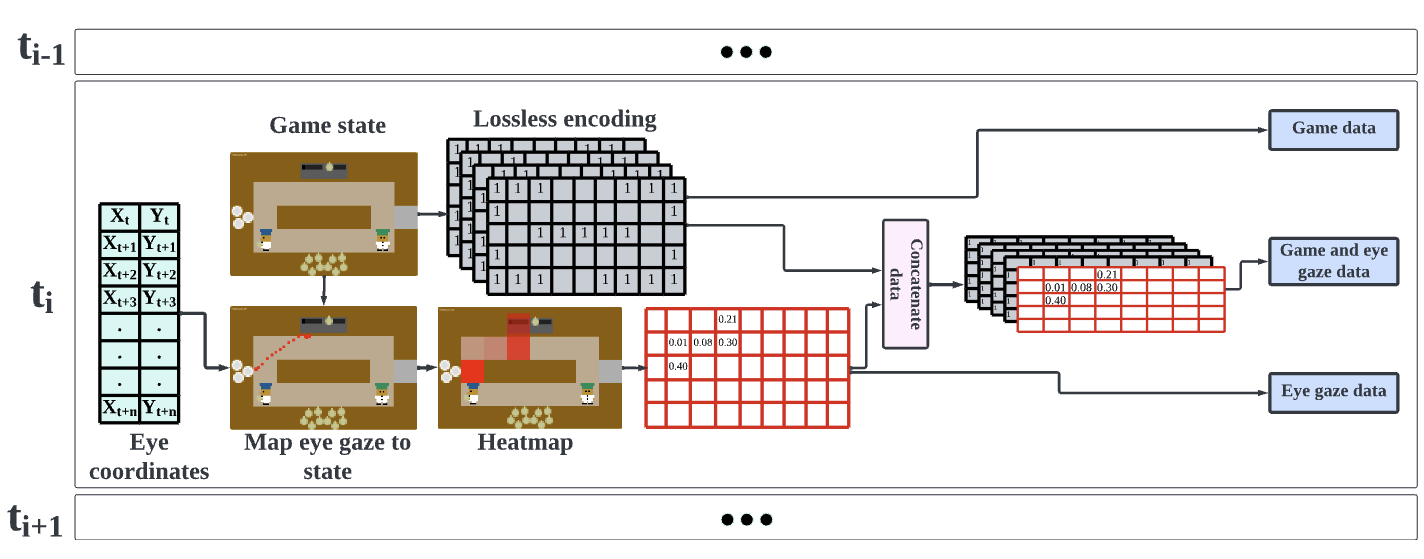

Eyes on the Game: Deciphering Implicit Human Signals to Infer Human Proficiency, Trust, and Intent

Nikhil Hulle, Stéphane Aroca-Ouellette, Anthony J Ries, Jake Brawer, Katharina Von Der Wense, Alessandro Roncone

33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024

arxiv / We show that eye gaze data and gameplay data can be used to accurately model a human teammate's intentions and beliefs about an AI teammate in the game Overcooked.

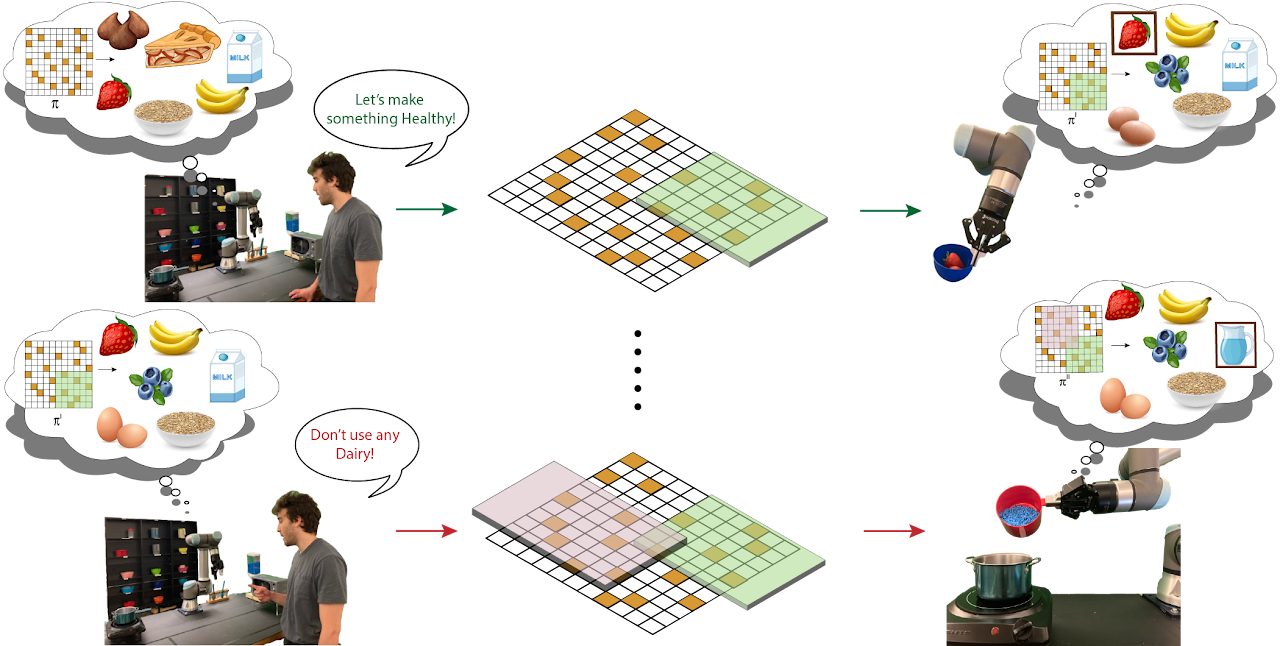

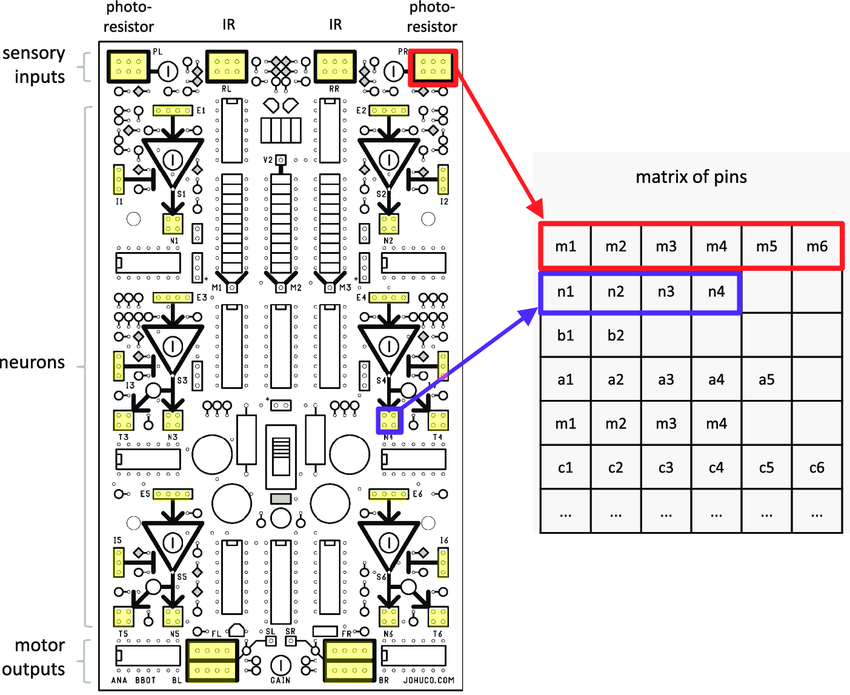

Interactive Policy Shaping for Human-Robot Collaboration with Transparent Matrix Overlays

Jake Brawer, Debasmita Ghose, Kate Candon, Meiying Qin, Alessandro Roncone, Marynel Vázquez, Brian Scassellati

ACM/IEEE International Conference on Human-Robot Interaction, 2023

paper / website / 🏆 Best Technical Paper Award. This work introduces Transparent Matrix Overlays, a novel framework that allows humans to shape a robot’s policy during execution using simple verbal commands. The approach aims to enhance both adaptability and explainability in human-robot collaboration.

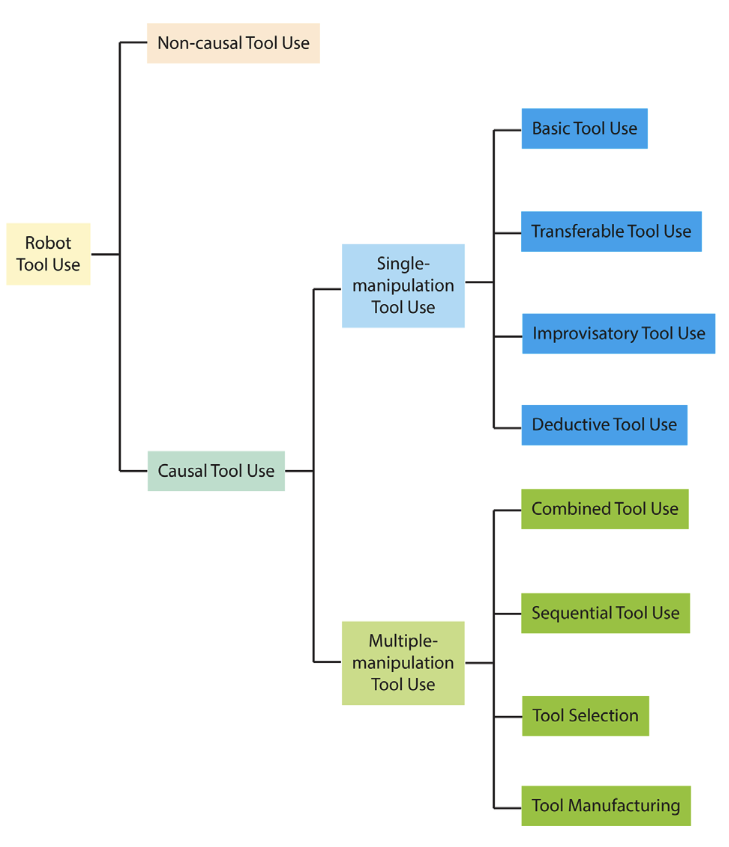

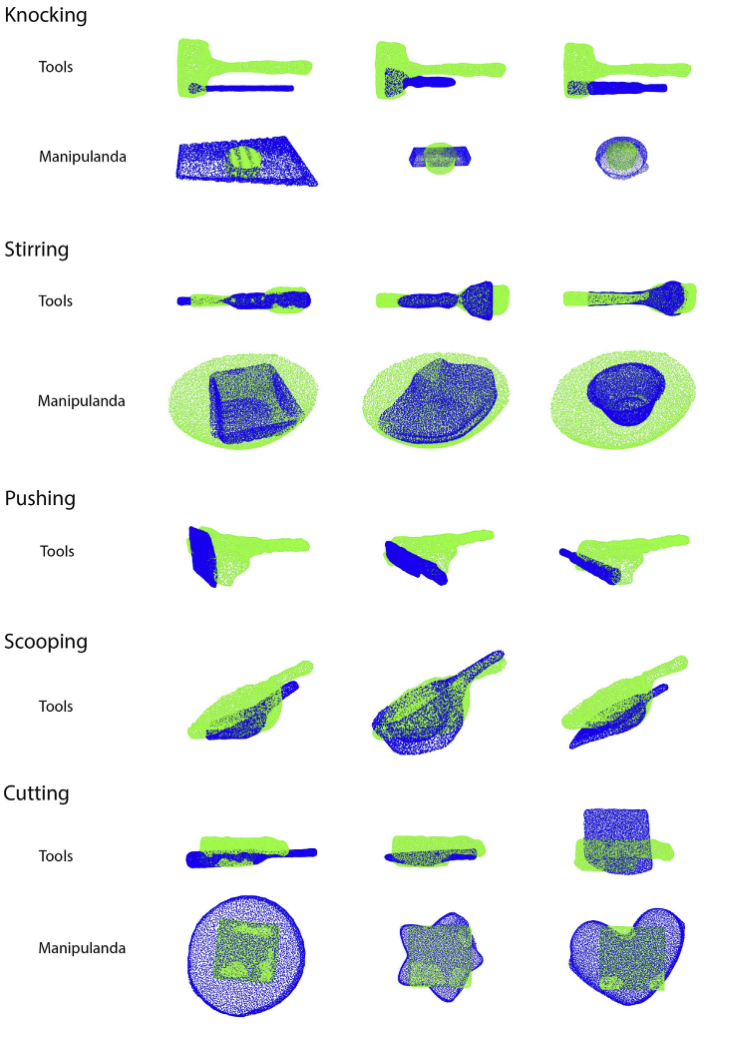

Robot Tool Use: A Survey

Meiying Qin, Jake Brawer, Brian Scassellati

Frontiers in Robotics and AI, 2023

paper / This survey identifies three critical skills required for robot tool use: perception, manipulation, and high-level cognition. It also introduces a taxonomy inspired by the animal tool-use literature, laying the foundation for future research and practical guidelines in robotic tool use.

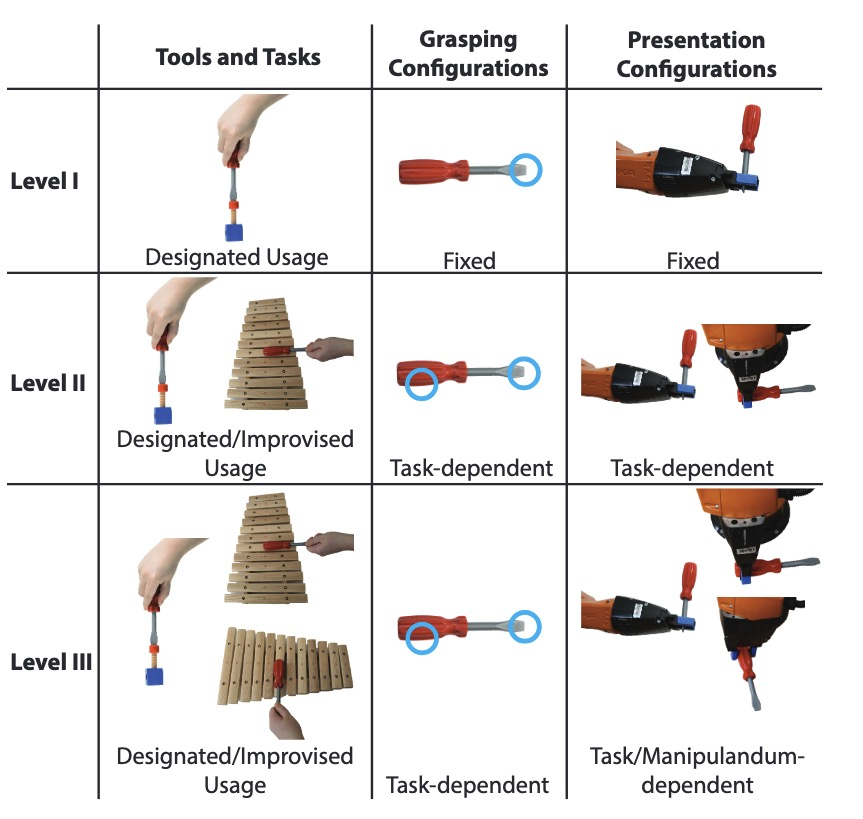

Task-Oriented Robot-to-Human Handovers in Collaborative Tool-Use Tasks

Meiying Qin, Jake Brawer, Brian Scassellati

31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2022

paper / This paper introduces a taxonomy of robot-to-human handovers and focuses on task-oriented handovers, where the object is passed in a way that facilitates immediate tool use. The proposed method trains the robot via tool-use demonstrations, enabling adaptable handovers even for novel tools and unusual usage contexts.

Rapidly Learning Generalizable and Robot-Agnostic Tool-Use Skills for a Wide Range of Tasks

Meiying Qin*, Jake Brawer*, Brian Scassellati

Frontiers in Robotics and AI, 2021

paper / Co-first authors: Meiying Qin and Jake Brawer contributed equally to this work. This paper presents TRI-STAR, a framework for teaching robots tool-use skills that generalize across tasks, tools, and robot platforms. With only 20 demonstrations, TRI-STAR learns adaptable and transferable tool-use strategies, enabling robots to solve diverse tasks using novel tools.

A Causal Approach to Tool Affordance Learning

Jake Brawer, Meiying Qin, Brian Scassellati

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

paper / video / This paper presents a method for robots to build causal models of tool affordances through observation and self-supervised experimentation. By understanding cause-and-effect relationships, robots can generalize tool-use skills to novel tools and contexts with minimal training.

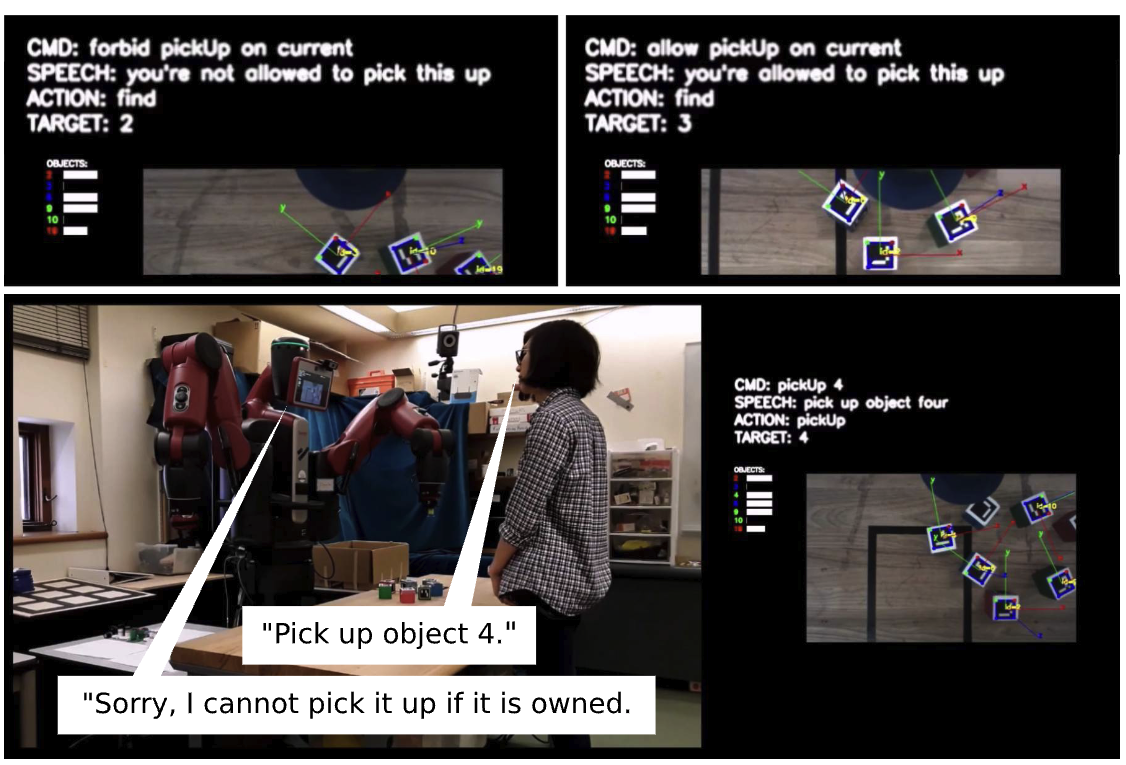

That’s Mine! Learning Ownership Relations and Norms for Robots

Zhi-Xuan Tan, Jake Brawer, Brian Scassellati

Proceedings of the AAAI Conference on Artificial Intelligence, 2019

paper / This paper presents a system for robots to learn and apply ownership norms through incremental learning and Bayesian inference. By combining rule induction, relation inference, and perception, the system allows robots to reason about ownership and follow social norms during object manipulation.

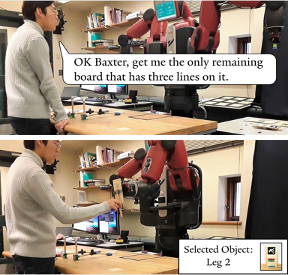

Situated Human–Robot Collaboration: Predicting Intent from Grounded Natural Language

Jake Brawer, Olivier Mangin, Alessandro Roncone, Sarah Widder, Brian Scassellati

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018

paper / This paper presents a framework for grounding natural language into robot action selection during collaborative tasks. By maintaining separate models for speech and context, the system incrementally updates its understanding of intent, enabling more fluent human-robot collaboration.

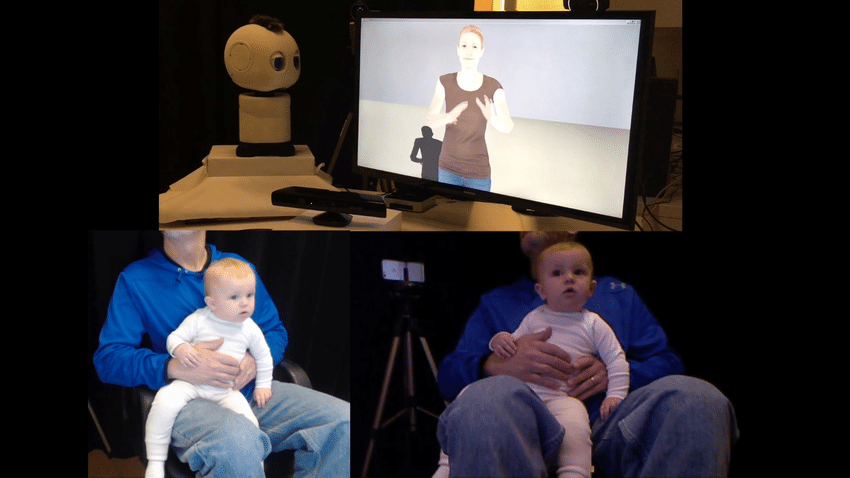

Teaching Language to Deaf Infants with a Robot and a Virtual Human

Brian Scassellati, Jake Brawer, Katherine Tsui, Setareh Nasihati Gilani, Melissa Malzkuhn, Barbara Manini, Adam Stone, Geo Kartheiser, Arcangelo Merla, Ari Shapiro, David Traum, Laura-Ann Petitto

Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 2018

paper / This paper presents a multi-agent system combining a robot and a virtual human to augment language exposure for deaf infants during critical developmental periods. The system provides socially contingent visual language, addressing the challenge of language acquisition in deaf infants born to hearing parents.

Epigenetic Operators and the Evolution of Physically Embodied Robots

Jake Brawer, Aaron Hill, Ken Livingston, Eric Aaron, Joshua Bongard, John H Long Jr

Frontiers in Robotics and AI, 2017

This paper investigates how epigenetic operators, alongside standard genetic operators like recombination and mutation, shape the evolution of physically embodied robots. Results show that epigenetic operators can lead to qualitatively different evolutionary outcomes, with some effects reducing adaptability compared to traditional genetic processes.